nmT5: Is Parallel Data Still Relevant for Pre-training Massively Multilingual Language Models?

Abstract

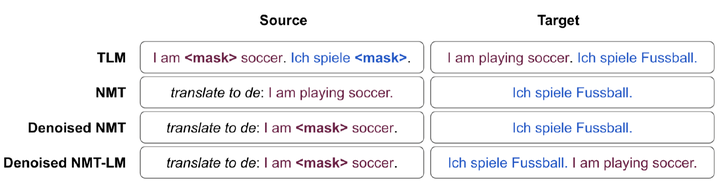

nmT5 studies the role of parallel data in pre-training and adaptation of massively multilingual language models.

Type

Publication

Proceedings of the Annual Meeting of the Association for Computational Linguistics